Good Charts, Wrong Data: A Data Sanity Check Framework for Data Visualizers

A framework and guidelines to identify data-reality gaps

This is post #2 of my VisThink series on “Thinking Effectively with Data Visualization.” All the posts in the series are collected here: 👉 VisThink Series.

The series mirrors the main elements that I teach in my online live course, “Thinking Effectively with Data Visualization.” The course helps you gain confidence in your data thinking and avoid costly mistakes. You can watch the first lecture for free by following this link: 👉 Introduction to VisThink. If you are interested in the course, see the details on the course webpage.

One fundamental notion that is often overlooked in data visualization practice is that no amount of design or data processing skills can overcome problems inherent in the data due to the way it was generated and collected. This is a very apt case for the classic statement, “garbage in, garbage out.” I am sure that while you read this, you may find my point obvious and ask, “Enrico, of course I know that bad data leads to bad charts!” Yeah … maybe. But how do you know when you have bad data? How do you judge if a number, for example, represents the thing you think it represents? Do you have a systematic procedure, or do you go by intuition?

In this post, I will share a framework I have developed to think systematically about evaluating your data, so that you can be more mindful of the impact it can have on the validity of your visualizations.

Validity?

Before we move forward, let’s clarify what we mean by validity. If you think about it, a visualization is not inherently valid or invalid until some information is extracted from it. In other words, the thing that is valid or invalid is the information extracted from the visual representation and the data it represents. With visualization, we often have two main situations. When we are producers, we interpret the content and generate our own understanding of the facts depicted by the representation. When we are consumers, we are presented with interpretations of facts that someone else has generated for us (e.g., news media, scientific reports, presentations). In both cases, the output of interacting with visualizations is a series of facts about the world that we, or somebody else, extracted from the data. The relevant issue here is whether these facts constitute a valid inference from the data and the representation or not.

Let me provide you with an example to make this concept more concrete before I proceed with more details. The map below shows the total number of vehicle collisions in New York City by zip code. (This is an example I have used before in my newsletter - please bear with me.)

This representation, without a specific interpretation (or extracted message, if you will) is not inherently valid or invalid. It becomes valid or invalid only when paired up with a specific interpretation. Contrast these two possible titles for the same map.

Title 1: “Collision Hot Spots: Busy Areas Experience Many Collisions”

Title 2: “Collision Hot Spots: Dangerous Areas to Drive in NYC”

While Title 1 is a legitimate interpretation of the map, Title 2 is not. Areas with more collisions (the darker spots) are not necessarily areas where driving is more dangerous; they may simply be areas with more cars on the road. Therefore, the first title is OK, the second is not.
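To make the difference concrete, here is a minimal Python sketch that ranks a few zip codes by raw collision count and by collision rate. All numbers are invented for illustration, and `vehicle_trips` is a hypothetical exposure measure that is not part of the collision data shown in the map.

```python
# Hypothetical figures for three zip codes. None of these numbers come
# from the actual NYC collision data; "vehicle_trips" is an assumed
# measure of how much driving happens in each area.
areas = {
    "10001": {"collisions": 900, "vehicle_trips": 3_000_000},
    "10451": {"collisions": 400, "vehicle_trips": 800_000},
    "11201": {"collisions": 600, "vehicle_trips": 2_400_000},
}


def rate_per_100k_trips(record):
    """Collisions per 100,000 vehicle trips (assumed exposure measure)."""
    return record["collisions"] / record["vehicle_trips"] * 100_000


# Ranking that supports Title 1: where do many collisions happen?
by_count = sorted(areas, key=lambda z: areas[z]["collisions"], reverse=True)

# Ranking that Title 2 would need: where is a single trip most risky?
by_rate = sorted(areas, key=lambda z: rate_per_100k_trips(areas[z]), reverse=True)

print("Ranked by raw count:", by_count)
print("Ranked by rate:     ", by_rate)
```

In this made-up example, the area with the fewest collisions has the highest rate per trip, so the two titles would point readers to different places.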

Data Validity

When we derive claims from data visualizations, their validity depends on many aspects. First, the reality that the data represents, namely, the real-world phenomena. Second, the extent to which the data and calculations represent the concepts one uses in their argument. Third, the correctness of the claims with respect to what the visualization shows, that is, whether the visualization actually depicts what is claimed. The first element depends on your knowledge of the domain problem. The second depends on your knowledge of the data and its meaning (and limitations). The third depends on your ability to read data visualizations skillfully and correctly (i.e., your “data visualization literacy”). Of these three elements, here we focus on the second: your ability to understand the data and identify its limitations.

How do you assess the validity of your data? More precisely, how do you assess the ability of your data to support the claims you derive from it?

To guide you through this task, I have developed a framework that organizes the problems in stages, allowing you to think about data validity more systematically.

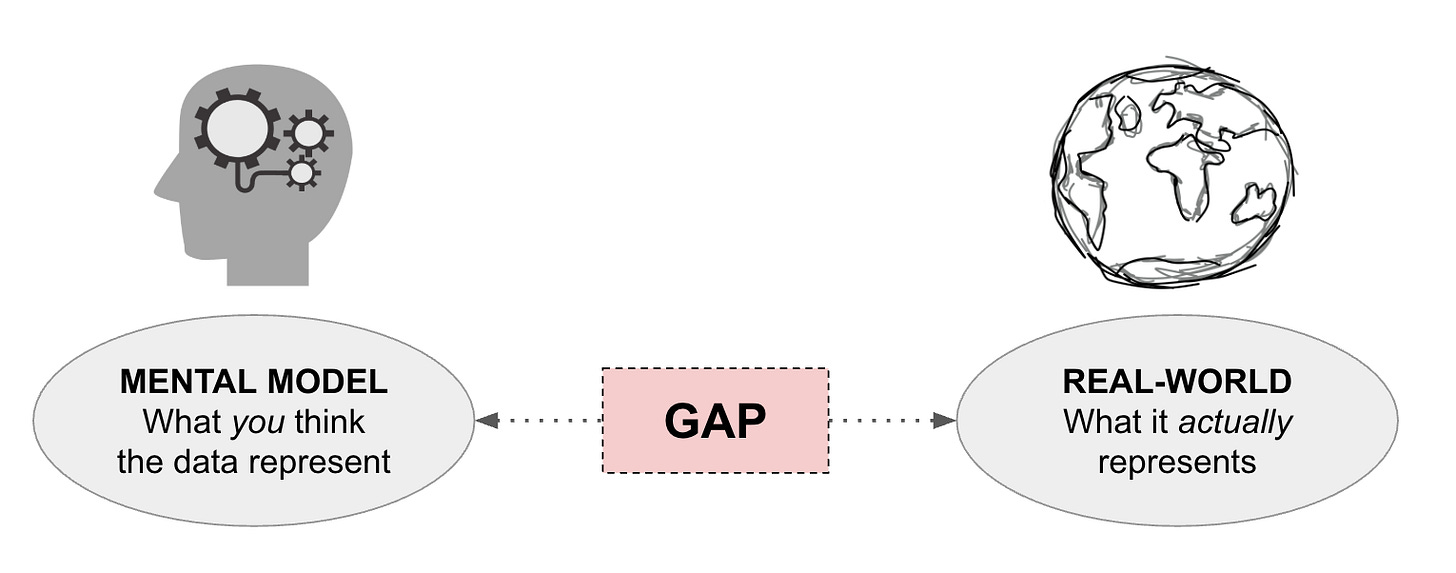

Data-Reality Gaps

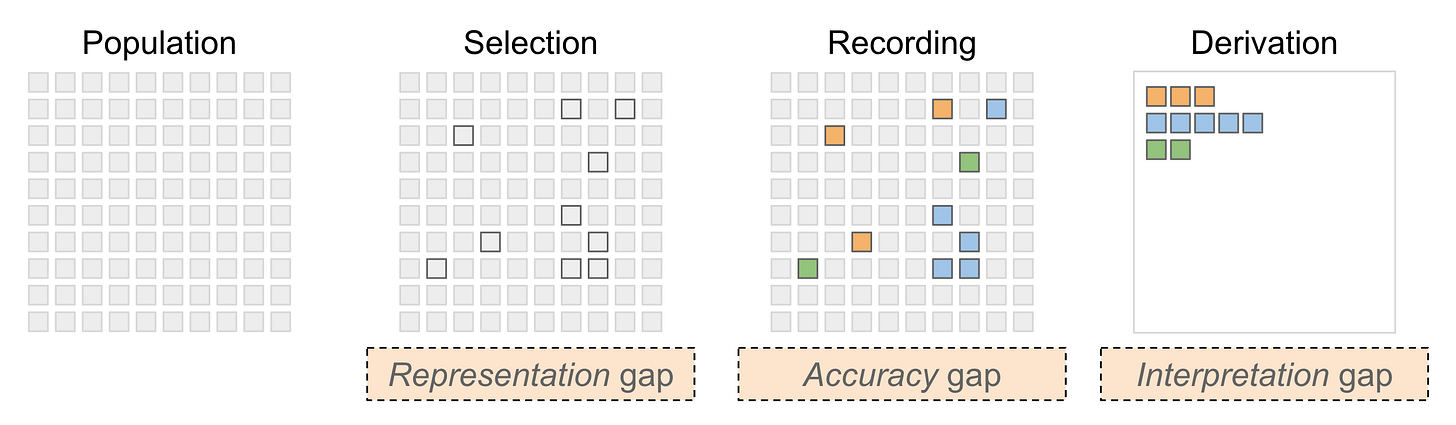

The framework is depicted in the diagram below, which shows an idealized version of how data is generated. The first element on the left is the “population.” This term is commonly used in statistics to define the idealized set of possible elements that one could pick from to obtain a complete set of measurements. For example, the whole population of a country would be the population, literally, for a data set collecting survey data about a given topic. However, the term population does not need to refer to people only; it can refer to any type of entity. For example, if we want to measure the temperature around the globe, all possible locations would be represented in the population of that dataset.

Every data set draws items from the population through some selection mechanism, which may be more or less explicit. The way this selection is made has a huge impact on what inferences we can draw from the data. Aside from the obvious fact that measuring anything from a subset introduces some uncertainty regarding the accuracy of a given value, the procedures used to derive a dataset can also introduce significant distortions and gaps between what we think the data represents and what it actually represents. In other words, if we are not aware of the difference between what we aim to study and what the data contains, we can easily be led astray. If a mismatch exists between the idealized population and the data, we have what I call a “Representation Gap,” which is the situation where the data does not accurately represent what we assume it does.
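One simple way to look for a Representation Gap is to compare the composition of the data with a reference for the population you have in mind. The sketch below assumes you have such a reference; the age groups, shares, counts, and the 5-point tolerance are all hypothetical.

```python
# Assumed reference shares for the population we *think* we are describing.
population_share = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}

# Assumed number of survey respondents per group in our data set.
sample_counts = {"18-34": 520, "35-54": 330, "55+": 150}


def representation_gaps(sample_counts, population_share):
    """Sample share minus population share for each group."""
    total = sum(sample_counts.values())
    return {
        group: sample_counts.get(group, 0) / total - share
        for group, share in population_share.items()
    }


def flag_gaps(gaps, tolerance=0.05):
    """Groups whose share differs from the reference by more than `tolerance`."""
    return sorted(group for group, gap in gaps.items() if abs(gap) > tolerance)


gaps = representation_gaps(sample_counts, population_share)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.0%}")
print("Check these groups:", flag_gaps(gaps))
```

A flagged group does not prove the data is unusable; it only tells you where your mental model of the population and the actual content of the data diverge.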

Conceptually, however, this is not the only problem that can exist in a data set. A second logical step after selecting the elements drawn from our hypothetical population is to measure values from the selected items. However, measurement procedures can be faulty in numerous ways, resulting in a second gap: the “Accuracy Gap,” which occurs when the values do not accurately represent the measured reality. A good example is a sensor that is malfunctioning or influenced by environmental factors we are unaware of. Another example is the mismatch between what people answer in a survey and what they actually believe.
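For sensor-like data, two cheap checks often reveal an Accuracy Gap: values outside a physically plausible range, and long runs of identical values that suggest a stuck sensor. The sketch below is one possible way to do this; the readings, the plausible range, and the run length threshold are assumptions made for illustration.

```python
def out_of_range(readings, low, high):
    """Indices of readings outside the plausible [low, high] interval."""
    return [i for i, value in enumerate(readings) if not (low <= value <= high)]


def stuck_runs(readings, min_length=4):
    """(start_index, length) for each run of identical consecutive values."""
    runs = []
    start = 0
    for i in range(1, len(readings) + 1):
        # A run ends at the end of the list or when the value changes.
        if i == len(readings) or readings[i] != readings[start]:
            if i - start >= min_length:
                runs.append((start, i - start))
            start = i
    return runs


# Hypothetical temperature readings in degrees Celsius.
readings = [21.4, 21.6, 21.5, -99.0, 21.7, 22.0, 22.0, 22.0, 22.0, 22.0, 22.3]

print("Out of range at:", out_of_range(readings, low=-40, high=60))
print("Suspicious flat runs:", stuck_runs(readings))
```

Here the `-99.0` value looks like a placeholder for a failed measurement, and the five identical readings deserve a second look before they end up in a chart.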

Finally, data is rarely distributed in the format in which it was collected. Most often, data is pre-processed and transformed to produce the final dataset we use for our analysis. This final step, however, creates another level of indirection that often leads to misinterpretation. I call this third gap the “Interpretation Gap,” because its main manifestation is a misinterpretation of the real meaning of the values (often individual metrics) the data contains. The best example of this problem is what I like to call “black-box numbers”: individual numbers that are obtained through complex calculations and are (often too casually) used as a faithful representation of the phenomenon they purport to depict. For example, what we commonly call “inflation” is based on the Consumer Price Index, which aggregates the prices of thousands of goods and services into a single number, based on the spending pattern of an “average consumer.” If prices increase rapidly only for a specific set of goods, such as housing and food, the overall CPI may not accurately represent the burden that specific segments of the population experience.
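A small calculation shows how a single aggregate can hide very different experiences. The sketch below is a deliberately simplified weighted average, not the actual CPI methodology; the categories, price changes, and spending weights are all invented.

```python
# Assumed year-over-year price changes per spending category.
price_change = {"housing": 0.08, "food": 0.07, "transport": 0.01, "other": 0.01}

# Assumed budget shares: an "average consumer" versus a household that
# spends most of its budget on housing and food.
average_weights = {"housing": 0.30, "food": 0.15, "transport": 0.20, "other": 0.35}
low_income_weights = {"housing": 0.45, "food": 0.30, "transport": 0.10, "other": 0.15}


def weighted_change(weights, changes):
    """Weighted average of price changes; weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[category] * changes[category] for category in weights)


headline = weighted_change(average_weights, price_change)
experienced = weighted_change(low_income_weights, price_change)

print(f"Headline number:        {headline:.2%}")
print(f"Housing/food-heavy mix: {experienced:.2%}")
```

With these made-up inputs the headline figure is 4%, while the household that spends mostly on housing and food faces close to 6%. Both numbers are correct; they simply answer different questions.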

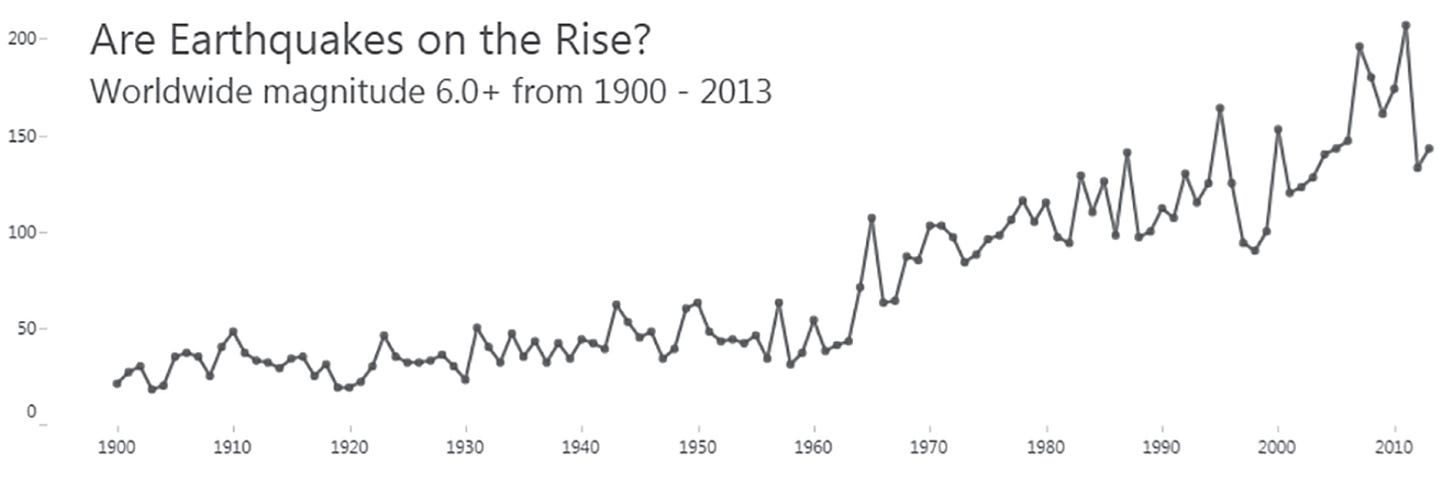

Beyond these three gaps, there is one more element to consider: the “Consistency Gap.” This gap stems from the fact that data is often collected over time and across multiple sources. When relevant differences exist in the way data is collected or processed across sources or time spans, major misinterpretations can occur. A good example, about earthquakes, comes from Ben Jones’ book “Avoiding Data Pitfalls,” from which I have also borrowed the term “data-reality gaps.”

Earthquakes are not really on the rise. What changed is the precision of the instruments used to detect the earthquakes, causing this apparent upward trend. If one is not careful about consistency, apparent trends can easily lead to faulty interpretations.
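One way to test for this kind of Consistency Gap is to restrict the comparison to the part of the data that was recorded in a comparable way across the whole period. The sketch below uses an invented catalog and assumes, purely for illustration, that events of magnitude 6.0 and above were detected equally well in every year.

```python
# Hypothetical catalog: recorded magnitudes per year. The growing number of
# small events mimics improved detection, not increased seismic activity.
catalog = {
    1980: [6.1, 6.4, 7.0, 4.9],
    2000: [6.2, 6.0, 7.1, 4.8, 4.5, 3.9, 3.2],
    2020: [6.3, 6.5, 6.9, 4.7, 4.1, 3.8, 3.0, 2.9, 2.5, 2.2],
}


def counts_by_year(catalog, min_magnitude=None):
    """Events per year, optionally keeping only magnitudes >= min_magnitude."""
    return {
        year: sum(
            1 for m in magnitudes
            if min_magnitude is None or m >= min_magnitude
        )
        for year, magnitudes in catalog.items()
    }


print("All recorded events:   ", counts_by_year(catalog))
print("Magnitude 6.0 and above:", counts_by_year(catalog, min_magnitude=6.0))
```

In this toy catalog the total count more than doubles, while the count of large events stays flat, which is the pattern you would expect if the detection changed and the phenomenon did not.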

Performing Data Sanity Checks

The little framework I presented above can be used in practice to perform a sanity check before you start manipulating and visualizing your data. I suggest you ask yourself four main questions:

Selection: What is included/excluded? Do the included elements match my mental model of the reality I intend to depict?

Recording: Are the values recorded in the data reliable and accurate? Are there ways in which the recording process may have introduced distortions and inaccuracies?

Derivation: Are the derived values valid? Do they capture what they claim to capture? And do they represent the concepts I intend to depict?

Consistency: Does any of the above change over time or space (or source)? Is it possible that different sources or time periods used different data collection standards?

While this does not ensure you catch every possible limitation in your data, it goes a long way toward helping you avoid major flaws and interpretation errors.

One big topic I did not cover here, which should also be taken into consideration, is the effect of missing data. Missing values can also wreak havoc in your data analysis and visualization if you are not careful in detecting and handling them. I will cover this problem in a future post of the series.

That’s all for now. Let me know if this framework is useful to you! Please leave your comments below and share it with others who may be interested in these ideas! Thanks! 🙏